Current Research Interests of Prof. Keshab K. Parhi

My current research spans four areas: energy-efficient artificial intelligence (AI), homomorphic encryption for the cloud, quantum codes and quantum fault tolerance, and neuroengineering/brain signal processing. I am exploring all aspects of energy-efficient architectures for AI models and agents, for both inference and training. I am also working on hardware accelerators for homomorphic encryption. A third area of research addresses quantum codes and quantum fault tolerance. In neuroengineering, while it is well known that Granger causality is not well suited to neuroscience applications, it remains unknown which causality measure is. There is a need for a more appropriate causality model. I am exploring the use of causal brain network models and new modeling approaches to improve the lives of people with epilepsy. My ongoing research efforts on these four problems are outlined below.

Energy-efficient Artificial Intelligence (AI) Models and Agents

Current research is directed towards the design of not only energy-efficient models but also AI agents, based on novel algorithm-architecture codesign approaches. For our proposed vision of energy-efficient, hallucination-free, small domain-specific models, please refer to our arXiv preprint.

The field of AI has evolved over the last 80 years; see our review paper in OJCAS 2020. Since 2012, AI has taken a tight hold of broad aspects of society, industry, business, and governance in ways that dictate the prosperity and might of the world's economies. The current state of AI is akin to the early stages of the Internet, whose user base grew from 15 million in 1995 to more than 5 billion in 2024. AI today is where the Internet was in 1998-1999 (150-200 million users). The AI market is similarly projected to grow from $189 billion in 2023 to $4.8 trillion by 2033. The field is currently dominated by large language models (LLMs) and vision language models (VLMs), which have ushered in a new era of AI agents, typically designed by fine-tuning foundation LLMs/VLMs. The introduction of transformers in 2017 was a major advance in AI. Transformers combined with reinforcement learning led to ChatGPT, which revolutionized the world after its release in November 2022.

Between AlexNet (2012) and AlphaGo Zero (2017), the compute used to train state-of-the-art AI systems increased by about 300,000×, doubling roughly every 3.4 months, with a large carbon footprint. Training GPT-3 consumed 1.287 GWh of energy. Clearly, LLM scaling faces an “energy consumption wall” (in comparison, the human brain consumes only about 20 W). Typical approaches to reducing energy include sparsity at various levels ranging from neurons to filters to channels, model reduction by low-rank approximation (as in low-rank adaptation, LoRA), tensor decomposition, and quantization. DeepSeek-R1 has recently demonstrated that high model accuracy is achievable with limited energy consumption through sparsity, quantization, optimized use of GPU instructions, mixture-of-experts architectures, and reinforcement learning.
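To make two of the reduction techniques listed above concrete, the sketch below applies a truncated-SVD low-rank factorization and symmetric per-tensor int8 quantization to a synthetic weight matrix. The matrix, its rank, and the helper names (`low_rank_factorize`, `quantize_int8`) are illustrative assumptions, not taken from any of our papers; production systems typically use finer-grained (per-channel or per-group) quantization.

```python
import numpy as np

def low_rank_factorize(w: np.ndarray, rank: int):
    """Approximate w (m x n) by a @ b, with a: m x rank and b: rank x n (truncated SVD)."""
    u, s, vt = np.linalg.svd(w, full_matrices=False)
    a = u[:, :rank] * s[:rank]  # fold the top singular values into the left factor
    b = vt[:rank, :]
    return a, b

def quantize_int8(w: np.ndarray):
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = float(np.max(np.abs(w)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize_int8(q: np.ndarray, scale: float) -> np.ndarray:
    return q.astype(np.float64) * scale

def rel_error(ref: np.ndarray, approx: np.ndarray) -> float:
    return float(np.linalg.norm(ref - approx) / np.linalg.norm(ref))

rng = np.random.default_rng(0)
m, n, r = 256, 256, 16
# Hypothetical weight matrix: rank-16 structure plus a little noise.
w = rng.standard_normal((m, r)) @ rng.standard_normal((r, n)) + 0.01 * rng.standard_normal((m, n))

a, b = low_rank_factorize(w, r)
q, scale = quantize_int8(w)
err_lowrank = rel_error(w, a @ b)
err_int8 = rel_error(w, dequantize_int8(q, scale))

print(f"dense params: {w.size}, low-rank params: {a.size + b.size}")  # 65536 vs 8192
print(f"low-rank rel. error: {err_lowrank:.4f}, int8 rel. error: {err_int8:.4f}")
print(f"storage: {w.nbytes} bytes (float64) vs {q.nbytes} bytes (int8)")
```

The low-rank factors need 8× fewer parameters here because the synthetic matrix really is close to rank 16; real layers are rarely that compressible without fine-tuning.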

In our past work, we have proposed a novel regular sparsity approach referred to as the permuted diagonal network (PermDNN) [Micro 2018]; InterGrad, a gradient interleaving scheme that reduces memory access and energy consumption by a factor of 2 [TCAS I 2023]; and LayerPipe, an approach to distributed processing of neural networks that reduces hardware underutilization when training is mapped to multiple systolic arrays [ICCAD 2021] [Asilomar 2025]. Another research direction has addressed model reduction via tensor decomposition [CAS Magazine, 2023].
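The following is a minimal sketch of the storage and compute pattern behind a permuted-diagonal weight matrix, assuming each p×p block holds exactly one nonzero per row at column `(row + offset) % p`, with one offset per block. The function names and this cyclic-shift form are assumptions made for illustration; refer to the PermDNN paper for the actual formulation and hardware mapping.

```python
import numpy as np

def permdiag_matvec(values: np.ndarray, offsets: np.ndarray, x: np.ndarray, p: int) -> np.ndarray:
    """Compute y = W @ x where W is a grid of p x p permuted-diagonal blocks.

    values[i, j, c] is the only nonzero in row c of block (i, j); it sits in
    column (c + offsets[i, j]) % p of that block. Only bm * bn * p weights are stored.
    """
    bm, bn, _ = values.shape
    y = np.zeros(bm * p)
    cols = np.arange(p)
    for i in range(bm):
        for j in range(bn):
            idx = (cols + offsets[i, j]) % p          # column index for each row of the block
            y[i * p:(i + 1) * p] += values[i, j] * x[j * p + idx]
    return y

def to_dense(values: np.ndarray, offsets: np.ndarray, p: int) -> np.ndarray:
    """Expand the compact representation into an ordinary dense matrix (for checking)."""
    bm, bn, _ = values.shape
    w = np.zeros((bm * p, bn * p))
    for i in range(bm):
        for j in range(bn):
            for c in range(p):
                w[i * p + c, j * p + (c + offsets[i, j]) % p] = values[i, j, c]
    return w

rng = np.random.default_rng(1)
p, bm, bn = 4, 2, 3
values = rng.standard_normal((bm, bn, p))
offsets = rng.integers(0, p, size=(bm, bn))
x = rng.standard_normal(bn * p)

y_compact = permdiag_matvec(values, offsets, x, p)
y_dense = to_dense(values, offsets, p) @ x
print(np.allclose(y_compact, y_dense), values.size, "stored weights vs", bm * p * bn * p, "dense")
```

Because every block has the same regular structure, the compression ratio is exactly p and the index computation is a simple modular add, which is what makes this kind of sparsity hardware friendly compared with irregular pruning.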

Privacy-Preserving Computing: Homomorphic Encryption

Among the various privacy-preserving machine learning technologies aimed at protecting user-data privacy in cloud applications, methods based on fully homomorphic encryption (FHE) allow computations to be performed on encrypted data without leaking any information about the original data. This project develops efficient and scalable hardware architectures for privacy-preserving neural network (NN) inference based on ciphertext-ciphertext FHE. Unlike prior works, in which the NN weights are represented as plaintext polynomials, this project takes the first step towards ciphertext-ciphertext FHE, which preserves the privacy of both the user and the model provider. We will leverage scheme switching to use arithmetic-based schemes for linear functions and Boolean logic-based schemes for non-linear functions to accelerate NN computations. The research objective of this project is to improve the hardware efficiency of ciphertext-ciphertext FHE-based NN inference by orders of magnitude.
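To illustrate what "computing on encrypted data" means when both operands are ciphertexts, here is a toy, deliberately insecure secret-key LWE scheme with BFV-style scaling of the message by `DELTA = Q // T`. All parameters and function names are hypothetical choices for readability; real schemes such as BFV and CKKS use ring-LWE over polynomial rings, much larger parameters, and also support ciphertext multiplication, which this sketch does not.

```python
import random

Q = 2**16        # ciphertext modulus (toy value)
T = 16           # plaintext modulus
DELTA = Q // T   # places the message in the high-order bits, leaving room for noise
N = 32           # LWE dimension (toy value, far too small to be secure)

def keygen(rng: random.Random) -> list:
    return [rng.randrange(Q) for _ in range(N)]

def encrypt(m: int, s: list, rng: random.Random):
    a = [rng.randrange(Q) for _ in range(N)]
    e = rng.randint(-4, 4)                                # small noise term
    b = (sum(ai * si for ai, si in zip(a, s)) + e + DELTA * (m % T)) % Q
    return a, b

def decrypt(ct, s: list) -> int:
    a, b = ct
    v = (b - sum(ai * si for ai, si in zip(a, s))) % Q    # = DELTA*m + noise (mod Q)
    return ((v + DELTA // 2) // DELTA) % T                # round to the nearest multiple of DELTA

def add(ct1, ct2):
    """Ciphertext-ciphertext addition: decrypts to (m1 + m2) mod T while noise stays small."""
    (a1, b1), (a2, b2) = ct1, ct2
    return [(x + y) % Q for x, y in zip(a1, a2)], (b1 + b2) % Q

rng = random.Random(1)
s = keygen(rng)
c = add(encrypt(3, s, rng), encrypt(5, s, rng))
print(decrypt(c, s))  # 8
```

The server performing `add` never sees `s`, `3`, or `5`; it only manipulates ciphertexts. Each homomorphic operation grows the noise, which is why practical FHE needs careful parameter selection and, for deep computations, bootstrapping.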

Our past work has proposed low-latency architectures for cloud computing based on the number theoretic transform (NTT) for polynomial modular multiplication in homomorphic encryption [TIFS 2024] [TVLSI 2025].
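The core operation referred to above is multiplication in the ring Z_q[x]/(x^n + 1). The sketch below shows the textbook negacyclic NTT method with toy parameters n = 8, q = 17, and ψ = 3 (a primitive 16th root of unity mod 17, so ψ^8 ≡ −1). It uses an O(n²) transform for clarity rather than a butterfly-based fast NTT, and it is not the architecture from our papers; it is checked against schoolbook negacyclic multiplication.

```python
import random

Q, N, PSI = 17, 8, 3  # toy parameters: q ≡ 1 (mod 2n) and PSI has order 2n mod q

def ntt(a: list, root: int, q: int) -> list:
    """Direct O(n^2) number theoretic transform: A_k = sum_i a_i * root^(i*k) mod q."""
    n = len(a)
    return [sum(a[i] * pow(root, i * k, q) for i in range(n)) % q for k in range(n)]

def negacyclic_mul_ntt(a: list, b: list, n: int = N, q: int = Q, psi: int = PSI) -> list:
    omega = pow(psi, 2, q)            # primitive n-th root of unity
    psi_inv = pow(psi, -1, q)
    omega_inv = pow(omega, -1, q)
    n_inv = pow(n, -1, q)
    # Pre-twist by powers of psi so the negacyclic product becomes a cyclic convolution.
    a_t = [(a[i] * pow(psi, i, q)) % q for i in range(n)]
    b_t = [(b[i] * pow(psi, i, q)) % q for i in range(n)]
    c_hat = [(x * y) % q for x, y in zip(ntt(a_t, omega, q), ntt(b_t, omega, q))]
    c_t = [(x * n_inv) % q for x in ntt(c_hat, omega_inv, q)]   # inverse NTT
    return [(c_t[i] * pow(psi_inv, i, q)) % q for i in range(n)]  # undo the twist

def negacyclic_mul_schoolbook(a: list, b: list, q: int = Q) -> list:
    n = len(a)
    c = [0] * n
    for i in range(n):
        for j in range(n):
            if i + j < n:
                c[i + j] += a[i] * b[j]
            else:
                c[i + j - n] -= a[i] * b[j]   # x^n = -1
    return [x % q for x in c]

# (1 + x^7) * x = x + x^8 = x - 1 in Z_17[x]/(x^8 + 1)
print(negacyclic_mul_ntt([1, 0, 0, 0, 0, 0, 0, 1], [0, 1, 0, 0, 0, 0, 0, 0]))
# [16, 1, 0, 0, 0, 0, 0, 0]
```

Hardware NTT designs replace the direct transform with log₂ n stages of butterflies and typically merge the ψ twisting into the twiddle factors.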

We have also proposed solutions to the same problem based on time-domain fast filter algorithms [Trans. Computers 2023].
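As a rough illustration of the time-domain idea, the sketch below computes a linear convolution with a recursive 2-parallel fast FIR algorithm: each polynomial is split into even and odd (polyphase) components, and three half-size sub-products replace four, as in Karatsuba multiplication. The result is then reduced modulo x^n + 1. The function names, the power-of-two length restriction, and q = 12289 are assumptions for this example; the actual architectures in our paper are not reproduced here.

```python
import random

def linear_conv(a: list, b: list) -> list:
    """Reference schoolbook linear convolution."""
    c = [0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            c[i + j] += ai * bj
    return c

def ffa_conv(a: list, b: list) -> list:
    """Linear convolution of equal, power-of-two-length inputs via the 2-parallel fast FIR algorithm."""
    n = len(a)
    if n == 1:
        return [a[0] * b[0]]
    a0, a1 = a[0::2], a[1::2]            # polyphase (even/odd) components
    b0, b1 = b[0::2], b[1::2]
    p0 = ffa_conv(a0, b0)                # H0 X0
    p1 = ffa_conv(a1, b1)                # H1 X1
    p2 = ffa_conv([x + y for x, y in zip(a0, a1)], [x + y for x, y in zip(b0, b1)])
    mid = [z - x - y for z, x, y in zip(p2, p0, p1)]   # H0 X1 + H1 X0 from one product
    c = [0] * (2 * n - 1)
    for k in range(n - 1):
        c[2 * k] += p0[k]                # even outputs
        c[2 * k + 1] += mid[k]           # odd outputs
        c[2 * k + 2] += p1[k]            # delayed even outputs
    return c

def negacyclic_reduce(c: list, n: int, q: int) -> list:
    """Reduce a product of degree < 2n modulo (x^n + 1, q)."""
    r = [0] * n
    for k, ck in enumerate(c):
        if k < n:
            r[k] += ck
        else:
            r[k - n] -= ck               # x^n = -1
    return [x % q for x in r]

q, n = 12289, 8
rng = random.Random(0)
a = [rng.randrange(q) for _ in range(n)]
b = [rng.randrange(q) for _ in range(n)]
print(negacyclic_reduce(ffa_conv(a, b), n, q) == negacyclic_reduce(linear_conv(a, b), n, q))
```

Each recursion level trades one multiplication for a few additions, so the multiplier count for length n drops from n² to n^log₂3.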

Current work is directed towards a system-level FHE solution for schemes such as CKKS and BFV/BGV, using building blocks designed in our prior work.

Quantum Codes, Circuit Optimization, and Quantum Fault Tolerance

Quantum computers have the potential to solve problems in cybersecurity, drug development, traffic optimization, weather forecasting, financial modeling, and other areas, transforming state-of-the-art classical computing and communications. In the long term, we expect quantum gates to become more accurate and quantum computers to become fault tolerant. However, currently available noisy intermediate-scale quantum (NISQ) computers cannot yet perform large-scale useful computation, as they contain only hundreds to about a thousand qubits and are not fault tolerant. Commonly used quantum codes, such as surface codes, are poorly suited to large-scale computation because their code rates are very low: a distance-d surface code encodes a single logical qubit in roughly d² physical data qubits. The design of quantum error-correcting codes (ECC) that achieve high code rates and can correct errors, together with their encoder-decoder circuits, is therefore of great interest. While several quantum codes have been presented in the literature, significantly less research has been devoted to their realization in quantum computers. How these codes can be mapped to quantum gates for encoding and decoding, and how the resulting quantum circuits can be designed to satisfy the nearest-neighbor constraint, is not well understood.
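To make "code rate" concrete, the sketch below checks the CSS construction for the [[7,1,3]] Steane code, built from the [7,4] Hamming parity-check matrix, and compares its rate k/n with rotated surface codes that use d² data qubits per logical qubit. The helper names are hypothetical and ancilla qubits are ignored; the sketch only computes k = n − rank(H_X) − rank(H_Z) over GF(2) and checks that X and Z stabilizers commute.

```python
import numpy as np

def gf2_rank(mat: np.ndarray) -> int:
    """Rank of a binary matrix over GF(2) by Gaussian elimination."""
    m = mat.copy() % 2
    rows, cols = m.shape
    rank = 0
    for c in range(cols):
        pivot = next((r for r in range(rank, rows) if m[r, c]), None)
        if pivot is None:
            continue
        m[[rank, pivot]] = m[[pivot, rank]]   # move the pivot row up
        for r in range(rows):
            if r != rank and m[r, c]:
                m[r] ^= m[rank]               # eliminate column c from other rows
        rank += 1
        if rank == rows:
            break
    return rank

def css_check(hx: np.ndarray, hz: np.ndarray):
    """Return (stabilizers_commute, k) for a CSS code with X-checks hx and Z-checks hz."""
    commute = not np.any((hx @ hz.T) % 2)
    k = hx.shape[1] - gf2_rank(hx) - gf2_rank(hz)
    return commute, k

# [7,4] Hamming parity-check matrix: column j is the binary representation of j + 1.
H = np.array([[0, 0, 0, 1, 1, 1, 1],
              [0, 1, 1, 0, 0, 1, 1],
              [1, 0, 1, 0, 1, 0, 1]], dtype=np.int64)

commute, k = css_check(H, H)   # Steane code: H_X = H_Z = H
print(f"Steane code: commute={commute}, k={k}, rate={k}/{H.shape[1]}")
for d in (3, 5, 7):
    print(f"rotated surface code d={d}: rate=1/{d * d}")
```

Both families encode a single logical qubit per code block, so their rates fall as distance grows; high-rate codes such as quantum LDPC codes aim to encode many logical qubits per block.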

Our past research has led to new quantum circuit optimization approaches that reduce the number of quantum gates in quantum error-correcting code circuits [TCAS-I 2024] [CAS Magazine 2024]. Current research is directed towards circuit optimization for high-rate quantum codes such as quantum low-density parity-check (LDPC) codes, optimization for non-binary codes such as those based on qutrits, and quantum fault tolerance.
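Gate-count reduction can be illustrated with the simplest generic rewrite, cancellation of adjacent self-inverse gates such as H·H = I or CNOT·CNOT = I. This peephole pass is a standard textbook optimization, not the method of our papers. The gate representation, a `(name, qubits)` tuple, and the `SELF_INVERSE` set are assumptions for the example.

```python
from typing import List, Tuple

Gate = Tuple[str, Tuple[int, ...]]
SELF_INVERSE = {"h", "x", "y", "z", "cx", "cz", "swap"}

def cancel_inverse_pairs(circuit: List[Gate]) -> List[Gate]:
    """Remove pairs of identical self-inverse gates with nothing acting on their qubits in between."""
    out: List[Gate] = []
    for gate in circuit:
        name, qubits = gate
        # Most recent kept gate that shares at least one qubit with the new gate.
        prev = next((i for i in range(len(out) - 1, -1, -1)
                     if set(out[i][1]) & set(qubits)), None)
        # Exact tuple match: cx(0,1) and cx(1,0) differ; symmetric gates like cz are
        # handled conservatively (only identical qubit order cancels).
        if prev is not None and out[prev] == gate and name in SELF_INVERSE:
            del out[prev]   # G * G = I; gates in between act on disjoint qubits
        else:
            out.append(gate)
    return out

circuit = [("h", (0,)), ("cx", (0, 1)), ("h", (2,)), ("cx", (0, 1)), ("h", (0,)), ("x", (1,))]
print(cancel_inverse_pairs(circuit))  # [('h', (2,)), ('x', (1,))]
```

Because a removal can expose a new adjacent pair (the two H gates on qubit 0 above), the single forward pass with a stack-like output list catches cascaded cancellations without repeated sweeps.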

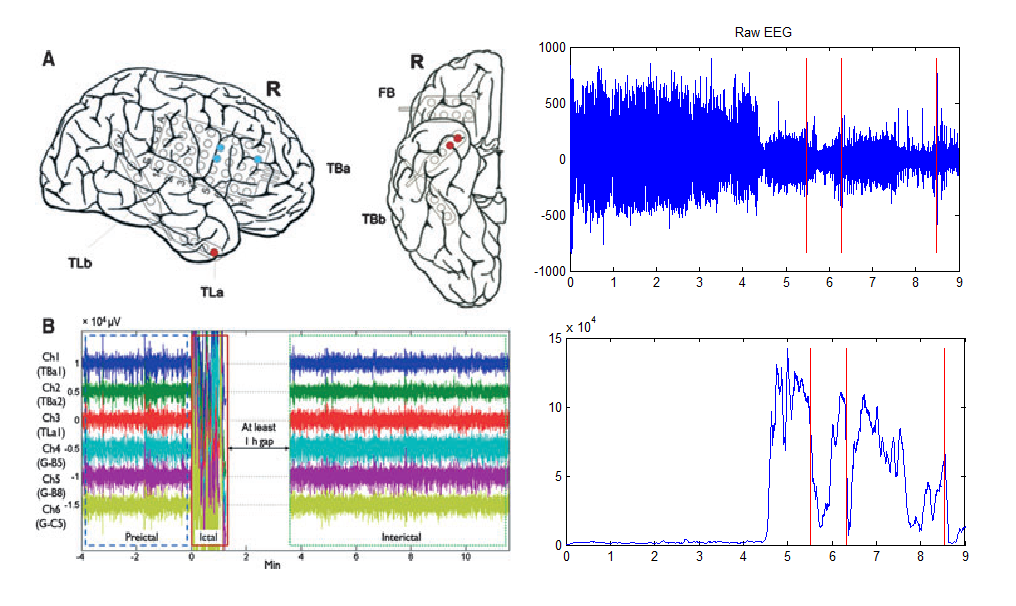

Seizure Prediction, Detection, and Onset Zone Identification from EEG/iEEG

Epilepsy is the second most common neurological disorder, affecting 0.8% of people worldwide. Approximately 70% of patients with epilepsy achieve partial or sufficient seizure control from medication or resective surgery. However, for the remaining 30% of patients, no effective treatment is currently available. A way to predict the occurrence of a seizure could significantly enhance therapeutic possibilities, leading to a better quality of life for patients.

The general goal of this project is to develop a patient-specific algorithm that can predict the occurrence of an epileptic seizure in advance. Specifically, our research is directed towards an algorithm that distinguishes intracranial electroencephalogram (iEEG) signals recorded before a seizure onset from those recorded during ordinary conditions, with high sensitivity and a low false positive rate. Our past approaches include the use of spectral power [Epilepsia 2011] and of ratios of band power as a novel biomarker [TBioCAS 2016].
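A minimal sketch of band-power and band-power-ratio features for one iEEG channel window is shown below, using a Hann-windowed FFT periodogram. The band edges, the theta/alpha pair, the 256 Hz sampling rate, and the synthetic test signal are hypothetical choices for illustration; the bands and ratios actually selected in our work are described in the cited papers.

```python
import numpy as np

# Hypothetical band edges in Hz.
BANDS = {"delta": (0.5, 4), "theta": (4, 8), "alpha": (8, 13), "beta": (13, 30), "gamma": (30, 100)}

def band_powers(x: np.ndarray, fs: float, bands: dict = BANDS) -> dict:
    """Unnormalized spectral power per band from a Hann-windowed periodogram."""
    x = np.asarray(x, dtype=float)
    window = np.hanning(len(x))
    spec = np.abs(np.fft.rfft((x - x.mean()) * window)) ** 2
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    return {name: float(spec[(freqs >= lo) & (freqs < hi)].sum()) for name, (lo, hi) in bands.items()}

def power_ratio(powers: dict, num: str, den: str, eps: float = 1e-12) -> float:
    """Ratio of two band powers; the common scale factor of the periodogram cancels."""
    return powers[num] / (powers[den] + eps)

fs = 256
t = np.arange(4 * fs) / fs                        # one 4 s window
x = 2.0 * np.sin(2 * np.pi * 6 * t) + 0.5 * np.sin(2 * np.pi * 10 * t)
p = band_powers(x, fs)
ratio = power_ratio(p, "theta", "alpha")
print(f"theta/alpha power ratio: {ratio:.1f}")    # close to (2.0 / 0.5)^2 = 16
```

In a prediction pipeline, such features would be computed over sliding windows and fed to a patient-specific classifier; ratios are attractive because they are insensitive to overall gain changes in the recording.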

Most past research has been based on short-term iEEG recordings. Seizure prediction and detection for long-term recordings are challenging problems because brain signals are non-stationary: the models vary from week to week or month to month; see [TBioCAS 2019].

Current research is directed towards long-term seizure prediction using a novel multi-model approach in which different seizures are grouped into different models, as opposed to the single-model approach used in the current literature; see, for example, our recent paper [JNE 2025].

In another research direction, we have developed an approach to localize the seizure onset zone based on a new network metric that exploits causality [JNE 2024] [Nature Scientific Reports 2025]. The new causality metric, referred to as frequency-domain convergent cross mapping (FDCCM), was developed in my lab [TBME 2023]. Current research is directed towards generalizing this approach and towards localizing the seizure onset zone from scalp EEG. Recording iEEG is an invasive procedure that requires surgically implanted electrodes, whereas scalp EEG is non-invasive. Success on this problem would therefore be significant, but it is a challenging problem.
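FDCCM builds on convergent cross mapping (CCM), introduced by Sugihara et al. (2012). The sketch below implements only standard time-domain CCM, not FDCCM's frequency-domain extension: if X drives Y, then the delay-embedded "shadow manifold" of Y contains information about X, so X can be estimated from nearest neighbors on Y's manifold. The coupled logistic maps, embedding dimension E = 2, and lag τ = 1 are illustrative assumptions, and refinements such as excluding temporally adjacent neighbors are omitted.

```python
import numpy as np

def embed(x: np.ndarray, E: int, tau: int) -> np.ndarray:
    """Time-delay embedding: row t is (x[t], x[t - tau], ..., x[t - (E - 1) * tau])."""
    start = (E - 1) * tau
    return np.column_stack([x[start - k * tau: len(x) - k * tau] for k in range(E)])

def ccm_skill(source: np.ndarray, target: np.ndarray, E: int = 2, tau: int = 1) -> float:
    """Correlation between `source` and its estimate from the shadow manifold of `target`.

    High skill suggests that `source` causally influences `target`.
    """
    m = embed(target, E, tau)
    src = source[(E - 1) * tau:]
    d = np.linalg.norm(m[:, None, :] - m[None, :, :], axis=-1)
    np.fill_diagonal(d, np.inf)                      # exclude each point as its own neighbor
    nn = np.argsort(d, axis=1)[:, :E + 1]            # E + 1 nearest neighbors
    dn = np.take_along_axis(d, nn, axis=1)
    d1 = np.maximum(dn[:, :1], 1e-12)                # distance to the closest neighbor
    w = np.exp(-dn / d1)
    w /= w.sum(axis=1, keepdims=True)                # exponentially decaying weights
    est = (w * src[nn]).sum(axis=1)
    return float(np.corrcoef(src, est)[0, 1])

def coupled_logistic(n: int, bxy: float = 0.02, byx: float = 0.1, x0: float = 0.4, y0: float = 0.2):
    """Two coupled logistic maps; byx sets how strongly x drives y."""
    x, y = np.empty(n), np.empty(n)
    x[0], y[0] = x0, y0
    for t in range(n - 1):
        x[t + 1] = x[t] * (3.8 - 3.8 * x[t] - bxy * y[t])
        y[t + 1] = y[t] * (3.5 - 3.5 * y[t] - byx * x[t])
    return x, y

x, y = coupled_logistic(1000)
rho_x_from_y = ccm_skill(x, y)   # evidence for x -> y
rho_y_from_x = ccm_skill(y, x)   # evidence for y -> x
print(f"x xmap from M_y: {rho_x_from_y:.3f}, y xmap from M_x: {rho_y_from_x:.3f}")
```

In a brain-network setting, the same skill score would be computed for every ordered pair of channels to build a directed connectivity matrix, from which network metrics can then be derived.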